xCAT Myrinet HOWTO

The purpose of this document is to describe the setup of Myrinet. This document assumes familiarity with xCAT 1.2.0 and the Building a Linux HPC Cluster with xCAT Redbook.

xCAT's method of setting up Myrinet is very similar to the default method as documented in Myrinet's README-linux, with the following exceptions:

What is Myrinet?

Prepare Kernel Tree (for 2.4 kernels)

Install the matching kernel source for the running kernel.

Create .config

For SuSE type:

cd /usr/src/linux

make mrproper

gunzip -c /proc/config.gz >.config

For Red Hat type:

cd /usr/src

ln -s linux-2.4 linux

cd /usr/src/linux

make mrproper

cp configs/(matching config) .config

Edit Makefile and validate that:

VERSION = 2

PATCHLEVEL = 4

SUBLEVEL = 19

EXTRAVERSION = -SMP (SuSE leave blank)

equals uname -r, e.g.:

2.4.19-SMP
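The Makefile check above can also be scripted. The following is a minimal sketch, not part of xCAT: the function names makefile_kernel_version and check_kernel_tree are hypothetical, and it assumes the four variables appear in the Makefile as simple "NAME = value" lines.

```shell
#!/bin/sh
# Print VERSION.PATCHLEVEL.SUBLEVEL followed by EXTRAVERSION, as read from
# the top-level kernel Makefile given in $1.
# Assumption: each variable is set on its own "NAME = value" line.
makefile_kernel_version() {
    awk -F'[ \t]*=[ \t]*' '
        $1 == "VERSION"      { v = $2 }
        $1 == "PATCHLEVEL"   { p = $2 }
        $1 == "SUBLEVEL"     { s = $2 }
        $1 == "EXTRAVERSION" { e = $2 }
        END { printf "%s.%s.%s%s\n", v, p, s, e }
    ' "$1"
}

# Succeed only when the Makefile version equals the running kernel release.
check_kernel_tree() {
    [ "$(makefile_kernel_version "$1")" = "$(uname -r)" ]
}

# Example use (not run here):
#   check_kernel_tree /usr/src/linux/Makefile || echo "Makefile does not match uname -r"
```

A blank EXTRAVERSION (the SuSE case) simply yields a version with no suffix.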

Prepare kernel. Type:

make oldconfig

make dep

make modules (ctrl-c after 30 seconds)

NOTE: For SuSE SLES8 ppc64 you must install all the *cross* RPMs except gcc-core, then type:

chmod 755 make_ppc64.sh

./make_ppc64.sh oldconfig

./make_ppc64.sh dep

./make_ppc64.sh modules (ctrl-c after 30 seconds)

Enjoy your prepared kernel tree.

Prepare Kernel Tree (for 2.6 kernels)

Install the matching kernel source for the running kernel.

Create .config

For SuSE type:

cd /usr/src/linux

make mrproper

gunzip -c /proc/config.gz >.config

For Red Hat type:

cd /usr/src

ln -s linux-2.6* linux

cd /usr/src/linux

make mrproper

cp configs/(matching config) .config

Edit Makefile and validate that:

VERSION = 2

PATCHLEVEL = 6

SUBLEVEL = 5

EXTRAVERSION = -SMP (SuSE do nothing)

equals uname -r, e.g.:

2.6.5-SMP

Prepare kernel. Type:

make oldconfig

make prepare-all

make all

Enjoy your prepared kernel tree.

Build GM RPM

Download gm-1.5.2.1_Linux.tar.gz, gm-1.6.*.tar.gz, gm-2.0.*_Linux.tar.gz, or gm-2.1.*_Linux.tar.gz from ftp://ftp.myri.com/pub/GM and save to /tmp.

For GM <= 1.5.* type (RH support only):

cd /tmp

$XCATROOT/build/gm/gmmaker (gm tarball)

E.g.:

$XCATROOT/build/gm/gmmaker gm-1.5.2.1_Linux.tar.gz

For GM 1.6.* type (RH and SuSE support only):

cd /tmp

$XCATROOT/build/gm-1.6/gmmaker (gm tarball)

E.g.:

$XCATROOT/build/gm-1.6/gmmaker gm-1.6.3_Linux.tar.gz

For GM 2.0.* type (RH and SuSE support only):

cd /tmp

$XCATROOT/build/gm-2.0/gmmaker (gm tarball)

E.g.:

$XCATROOT/build/gm-2.0/gmmaker gm-2.0_Linux.tar.gz

For GM 2.1.* type (RH and SuSE support only):

cd /tmp

$XCATROOT/build/gm-2.1/gmmaker (gm tarball)

E.g.:

$XCATROOT/build/gm-2.1/gmmaker gm-2.1_Linux.tar.gz

After the RPM is built note the location of the RPM.
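Because the gmmaker directory depends on the GM version, the choice can be derived from the tarball name. This is a sketch only: gmmaker_for is a hypothetical helper, not an xCAT command, and the patterns are based solely on the tarball names listed above.

```shell
#!/bin/sh
# Print the gmmaker path that matches a GM tarball name.
# XCATROOT is taken from the environment.
gmmaker_for() {
    case "$(basename "$1")" in
        gm-1.[0-5].*) echo "$XCATROOT/build/gm/gmmaker" ;;      # GM <= 1.5.*
        gm-1.6.*)     echo "$XCATROOT/build/gm-1.6/gmmaker" ;;
        gm-2.0[._]*)  echo "$XCATROOT/build/gm-2.0/gmmaker" ;;
        gm-2.1[._]*)  echo "$XCATROOT/build/gm-2.1/gmmaker" ;;
        *)            echo "unsupported GM tarball: $1" >&2; return 1 ;;
    esac
}

# Example use (not run here):
#   cd /tmp && "$(gmmaker_for gm-1.6.3_Linux.tar.gz)" gm-1.6.3_Linux.tar.gz
```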

Define Myrinet Mapper

GM 2.0.x/2.1.x:

Define a comma delimited list of hosts that will run the active mapper as mapperhost in $XCATROOT/etc/site.tab, e.g.:

mapperhost head01,head02

NOTE: Version 2.0.x does not support static maps. mapperhost must be defined.

GM < 2.0.x:

Define a single host that will run the static mapper as mapperhost in $XCATROOT/etc/site.tab, or leave it as NA, in which case the static mapper will run on the first Myrinet host in the noderange passed to makegmroutes (see Build Static Routes below), e.g.:

mapperhost head01

or

mapperhost NA

NOTE: Versions < 2.0.x support active maps, but xCAT does not. If you want to use active maps with versions < 2.0.x, read the Myrinet documentation. Dynamic maps have not been very stable with versions < 2.0.x.
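The mapperhost rules above can be expressed as a small sanity check. This is a sketch under assumptions: check_mapperhost is a hypothetical helper, any version starting with "2." is treated as GM 2.0.x/2.1.x, and NA is treated as "not defined" for those versions.

```shell
#!/bin/sh
# Check a mapperhost value against the rules described above.
# $1 = GM version (e.g. 1.6.3 or 2.0.8), $2 = mapperhost value.
check_mapperhost() {
    case "$1" in
        2.*)
            # GM 2.0.x/2.1.x: must be defined; a comma delimited list is allowed.
            # Assumption: NA counts as undefined here.
            [ -n "$2" ] && [ "$2" != "NA" ] ;;
        *)
            # GM < 2.0.x: NA or exactly one host (no comma).
            case "$2" in
                ""|*,*) return 1 ;;
                *)      return 0 ;;
            esac ;;
    esac
}

# Example use (not run here):
#   check_mapperhost 2.0.8 head01,head02 && echo "mapperhost OK"
```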

Define Myrinet IP addresses

For each Myrinet node define a CLASS B address using the node name suffixed with -myri0 in /etc/hosts, e.g.:

172.20.100.1 node001-myri0

172.20.100.2 node002-myri0

NOTE: You can use a different subnet if it is defined in $XCATROOT/etc/site.tab as myrimask, e.g.:

myrimask 255.255.255.0
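For more than a handful of nodes, the /etc/hosts entries can be generated rather than typed. The sketch below assumes the node naming (node001, node002, ...) and the 172.20.100.x addresses used in the example above; myri_hosts is a hypothetical helper.

```shell
#!/bin/sh
# Print /etc/hosts lines for nodes node001..nodeNNN on 172.20.100.x.
# $1 = number of nodes (keep it below 255 so the last octet stays valid).
myri_hosts() {
    i=1
    while [ "$i" -le "$1" ]; do
        printf '172.20.100.%d node%03d-myri0\n' "$i" "$i"
        i=$((i + 1))
    done
}

# Example use (not run here): review the output, then append it.
#   myri_hosts 16 >> /etc/hosts
```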

Type:

makedns

Install GM RPM on each node

For each node copy the RPM and install with rpm -i

or

Copy to $INSTALLDIR/post/kernel ($INSTALLDIR is defined in $XCATROOT/etc/site.tab) and set up a $XCATROOT/etc/postscripts.tab rule to install and set up Myrinet on post install, e.g.:

NODERANGE=myri {

myrinet 1.6.3_Linux 2.4.19-SMP

}

or

TABLE:noderes.tab:$NODERES:$noderes_gm=Y and OSVER=sles8 {

myrinet 1.6.3_Linux 2.4.19-SMP

}

The myrinet post install script takes two arguments: GM Version and Kernel Version.
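A rule of the NODERANGE form shown above can be generated from the tarball name and kernel version. This is only a sketch: myrinet_rule is a hypothetical helper, and deriving the GM Version argument by stripping "gm-" and ".tar.gz" from the tarball name is an assumption inferred from the examples in this document.

```shell
#!/bin/sh
# Print a postscripts.tab rule for the myrinet post install script.
# $1 = noderange, $2 = GM tarball name, $3 = kernel version (uname -r).
myrinet_rule() {
    gmver=${2#gm-}          # drop the leading "gm-"
    gmver=${gmver%.tar.gz}  # drop the trailing ".tar.gz"
    printf 'NODERANGE=%s {\nmyrinet %s %s\n}\n' "$1" "$gmver" "$3"
}

# Example use (not run here): review the output before adding it to
# $XCATROOT/etc/postscripts.tab.
#   myrinet_rule myri gm-1.6.3_Linux.tar.gz "$(uname -r)"
```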

Non-Myrinet nodes that are used for development should also have the same RPM installed, but with the gm service turned off:

chkconfig -d gm (SuSE)

or

chkconfig --del gm (Red Hat)

Build Static Routes (GM Version < 2.0 only)

From the management node type:

makegmroutes noderange

where noderange is the list of nodes that contain Myrinet adapters.

Read the noderange.1 man page for information on crafting a noderange. You will be prompted to save the routes for reinstalls. Default is 'y'.

Test

Check routes:

psh noderange $XCATROOT/sbin/gmroutecheck noderange

No news is good news.

Check IP: ssh to the first Myrinet node and type:

pping -i myri0 noderange

Then type:

ppping -i myri0 noderange

No news is good news.

Clear counters and run GM stress:

psh noderange /opt/gm/bin/gm_counters -C

pgmstress noderange 1024

This will start the gm_stress test program on all nodes. You should now be able to look at the Myrinet switches and see all of the green lights blinking.

After gm_stress has run for a while, you can look for problems with:

psh noderange /opt/gm/bin/gm_debug | grep bad

If any of the counters are non-zero, that node is worthy of further testing.
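On a large cluster it can help to reduce that output to a list of suspect nodes. The sketch below is an illustration only: the line format it parses (a "node:" prefix and a trailing integer counter value) is an assumption, not documented gm_debug or psh output, and nodes_with_bad_counters is a hypothetical helper.

```shell
#!/bin/sh
# Read counter lines on stdin and print each node that reports a non-zero
# counter, once per node.
# ASSUMED (hypothetical) line format:
#   node001: <counter name> <integer value>
nodes_with_bad_counters() {
    awk '$NF ~ /^[0-9]+$/ && $NF + 0 != 0 { sub(/:$/, "", $1); print $1 }' | sort -u
}

# Example use (not run here):
#   psh noderange /opt/gm/bin/gm_debug | grep bad | nodes_with_bad_counters
```

If the real output differs from the assumed format, adjust the awk fields accordingly.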

NOTE: If you kill pgmstress you will need to run:

psh noderange killall gm_stress

Run mute to check for bad cables, adapters, and ports.

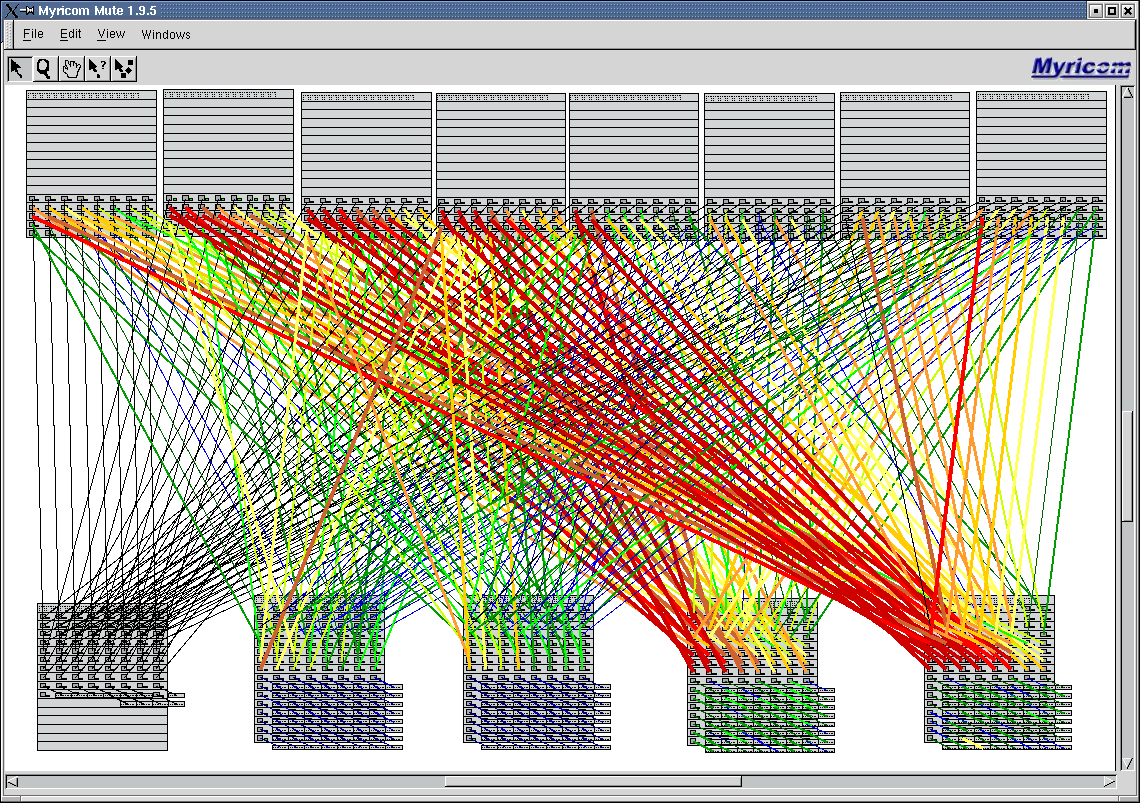

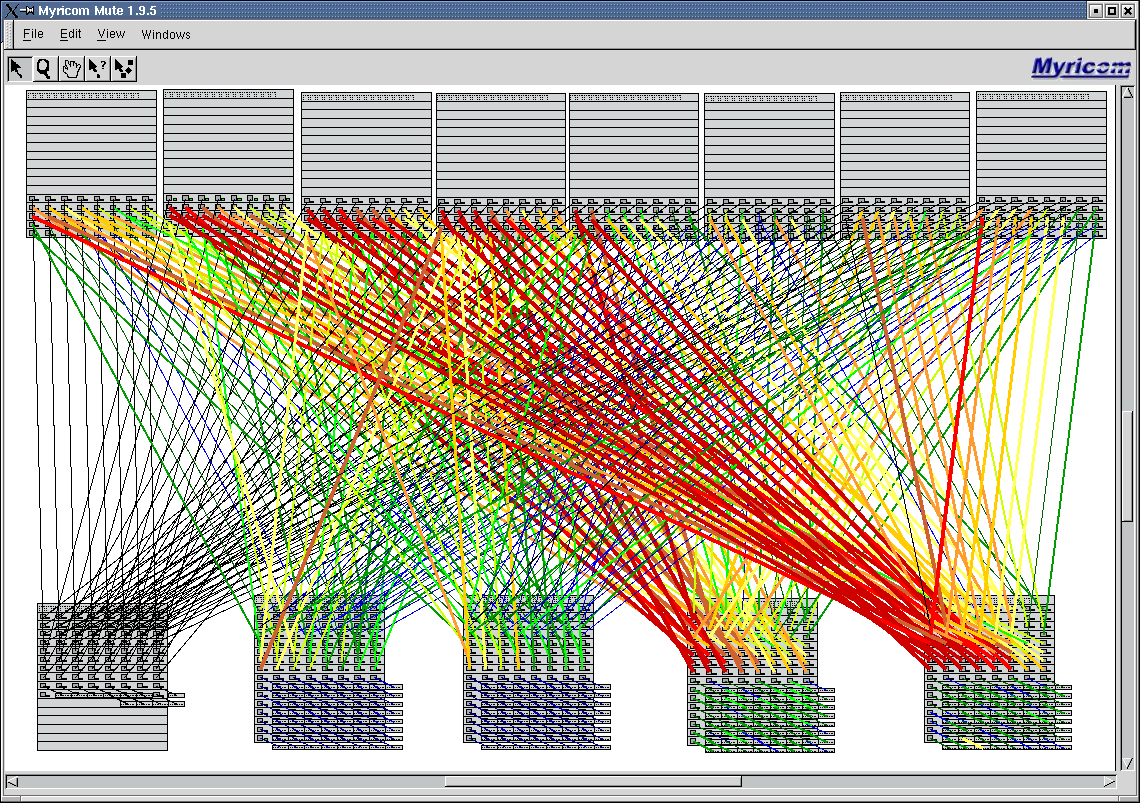

Mute

Mute is a Myrinet tool to analyze hardware problems while gm_stress is running. See http://www.myri.com for details on obtaining, building, and running Mute.

Obtain m3-dist.tar.gz and mute-1.9.5.tar.gz, place them in /tmp, and then type on the same node where you ran gmmaker:

/opt/xcat/build/gm-1.6/mutemaker m3-dist.tar.gz mute-1.9.5.tar.gz

OR

/opt/xcat/build/gm-2.0/mutemaker m3-dist.tar.gz mute-1.9.5.tar.gz

After build, create an EMPTY directory, then create the file mute.switches in the newly created directory and enter one line for each Myrinet switch IP, then run mute from that directory.
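The directory setup described above could be scripted as follows. This is a sketch: make_mute_dir is a hypothetical helper, and the directory name and switch IP addresses in the usage line are placeholders.

```shell
#!/bin/sh
# Create a new, empty directory and write mute.switches into it,
# one Myrinet switch IP per line.
# $1 = directory to create, remaining arguments = switch IPs.
make_mute_dir() {
    dir=$1; shift
    mkdir "$dir" || return 1     # fails if the directory already exists
    printf '%s\n' "$@" > "$dir/mute.switches"
}

# Example use (not run here; addresses are placeholders):
#   make_mute_dir /tmp/mute-run 10.0.0.1 10.0.0.2 && cd /tmp/mute-run
```

Using mkdir without -p makes the helper refuse to reuse an existing directory, which keeps the "EMPTY directory" requirement.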

NOTE: You must run mute from a host that can ping the Myrinet Ethernet management interfaces AND has a Myrinet adapter installed.

GM 2.0.x/2.1.x NOTES:

Before running mute, type on the node you plan to run mute on:

cd (directory with mute.switches file)

killall gm_mapper

/opt/gm/sbin/gm_mapper -v --level=10 --pause --map-file-0=mute.map

...

14 3,-7,1,7

h6 3,-7,2,7

h31 -5,-12,6 <------- computing routes

h23 -5,-10,6

h15 3,-6,-7,7

h7 3,-6,-6,7

h39 3,-6,-5,7

map version is now 1929343636

h0 checking hosts <------- entering verify

checking host h1 mode

checking host h2

checking disconnected link -15 on x0

checking for new hosts on x0

map version 0:60:dd:7f:3b:bf 1929343636

43 hosts and 17 xbars

I am h0

verifying again

h0 checking hosts

checking host h1

checking host h2 <-------- control-C anywhere around here is fine

Mute Q&D:

Click Build (Expect uninterpretable looping output, make coffee)

Click Close (After Build)

Click View/Reset Switches

Click View/Update Counters (takes a little time)

Click Windows/Counters

Click Start

Run pgmstress or other tests

Click Stop/Close

Click View/Update Counters (takes a little time)

Click View/Bad Packets

Click Windows/Info

For each port with bad packets, use the ?-> (? pointer) to click on the Myrinet port for info. A very low count of bad CRCs (e.g. single digits) is OK.

Illustration: mute monitoring pgmstress.

Support

Egan Ford

egan@us.ibm.com

January 2005

Dan Cummings

dcumming@us.ibm.com

December 2002